Do you want Google to de-index your site? That’s easy! Here’s how.

Note: That was just to get your attention. We would never even dream of search engines penalizing your site.

1. Duplicate Content

A common black hat SEO practice is posting the same content on several pages across different websites in an attempt to improve page views. Sites with duplicate content run the risk of being removed from the SERPs or, at the very least, plummeting to the bottom of the results.

Publish unique articles only. Check the Internet regularly for sites that have scraped your content, and if someone steals it, notify Google.

Let’s be clear on another thing: you will not be penalized if the same content appears within your own site on tag, archive and category pages. This is called internal duplication. It doesn’t make sense to penalize you for stealing your own property.
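If you want a rough way to check whether another page has lifted your article, you can compare the overlapping word sequences ("shingles") in the two texts. The sketch below is only an illustration and assumes you already have both texts as plain strings. The function names `shingles` and `jaccard_similarity` and the five-word window are my own choices for the example, not anything Google publishes or uses.

```python
import re


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Return the set of k-word sequences (shingles) found in text."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if len(words) < k:
        # Very short texts become a single shingle (or none if empty).
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard_similarity(a: str, b: str, k: int = 5) -> float:
    """Share of shingles the two texts have in common, from 0.0 to 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 0.0
    return len(sa & sb) / len(sa | sb)
```

A score close to 1.0 suggests the other page is a near copy of yours, while a score close to 0.0 suggests the wording is mostly different. Where you draw the line is a judgment call.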

2. Cloaking

Cloaking is a faster way to get your site devalued. This practice involves showing one version of your site to search engines and a different version to users. The goal is to improve your site’s search engine position or to trick people into visiting a malicious website. An example of cloaking is promoting a website as being about dog training, then sending visitors to a pornographic site or phishing pages. Search engines should have access to all your pages, because hiding pages means a devastating penalty if they are ever discovered on your site.

3. Doorway Pages

Doorway pages use cloaking tactics, but they’re tricky to detect because they’re made to look like landing pages. To illustrate, think of a white hat landing page for a blog showing snippets of original blog posts. A doorway page, by contrast, wouldn’t post original content; it would send visitors to spammy content, like a website selling Viagra. A doorway page is another easy way to get yourself banned. Affiliate links may also get you into trouble. Be careful using landing pages to post affiliate links.

4. Keyword Stuffing

Some site owners and webmasters, particularly newbie SEO practitioners, unintentionally stuff their pages with keywords. They focus so much on on-page SEO that they forget about Google’s rules.

Google always puts user experience first, improving its search engine for Internet users instead of marketers. That’s the idea behind the latest algorithm updates and the “no stuffing” rule. Keyword stuffing tends to make an article sound sloppy and poorly written.

Things have changed: the acceptable keyword density is now 2% per article, whereas it used to be 3-4%. Also, latent semantic indexing (LSI) allows Google to look at the meaning of content instead of blindly crawling the Web for keywords. This means that if you sprinkle your pages with synonyms of your keywords, you won’t have to practice stuffing.
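To see where an article stands against a density figure like the one above, you can count how many of its words belong to the keyword. This is a minimal sketch under one assumed definition: density is the words taken up by keyword occurrences, divided by the total word count, times 100. The function name `keyword_density` is hypothetical, and other tools may calculate density differently.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Percentage of words in text taken up by occurrences of keyword.

    Assumed definition: (occurrences * words in keyword) / total words * 100.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    phrase = re.findall(r"[a-z0-9']+", keyword.lower())
    if not words or not phrase:
        return 0.0
    n = len(phrase)
    # Slide a window of n words over the text and count exact phrase matches.
    hits = sum(1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase)
    return 100.0 * hits * n / len(words)
```

For example, a 100-word article that uses a one-word keyword twice comes out at 2.0. The function works for multi-word phrases too, although it only counts exact matches and ignores synonyms.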

5. Automated Content

I’m talking about nonsensical content churned out by machines, like automatically spun articles that look like random words haphazardly strewn together.

Some spun articles can pass inspection if they are of good quality, such as manually spun articles. As a webmaster, site owner or SEO expert, you should find it simple to tell a well-written article from a jumble of words that don’t make sense.

What black hat SEOs usually do is scrape existing content, feed it to an automatic spinner, and republish it.

6. Poor Value Content

Low-quality content in general is more likely to do poorly on Google because that’s the aim of Panda and Penguin: to weed out low-value pages and prioritize highly substantial content. Google is still hard at work clamping down on these websites, and future algorithm changes might make it easier to filter out webpages with thin content.

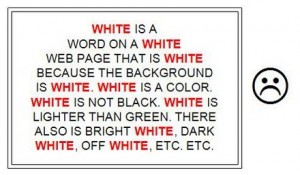

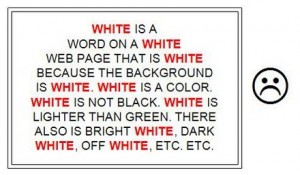

7. Very Tiny Font

Here, black hat SEOs place keywords at the bottom of a webpage and make the font so small that the text is invisible to the naked eye. This tactic is just ridiculous because no matter the font size, the spiders will still be able to read the code. Applying this sneaky tactic could get your site de-indexed.

8. Invisible Text

This black hat tactic is old school, but many still practice it. Keywords are written in the same color as the site’s background, hiding them from visitors while the page still tries to rank for them. It’s crazy to think that someone could actually get away with this tactic, because it’s very easy for search engines (and just about anyone) to spot the hidden keywords. Google could dump offending sites that are caught.
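To show just how easy it is to spot, here is a small sketch that scans a page’s inline styles for text whose color matches the background. It assumes the colors are set with inline `style` attributes and written the same way (for example, both as `#ffffff`). The names `HiddenTextFinder` and `find_hidden_text_tags` are made up for this example, and real crawlers are certainly more thorough than this.

```python
from html.parser import HTMLParser


def parse_style(style: str) -> dict[str, str]:
    """Turn an inline style string into a {property: value} dict."""
    props = {}
    for decl in style.split(";"):
        if ":" in decl:
            name, value = decl.split(":", 1)
            props[name.strip().lower()] = value.strip().lower()
    return props


class HiddenTextFinder(HTMLParser):
    """Collect tags whose inline text color equals their background color."""

    def __init__(self, page_background: str = "#ffffff"):
        super().__init__()
        self.page_background = page_background.lower()
        self.flagged: list[str] = []

    def handle_starttag(self, tag, attrs):
        style = parse_style(dict(attrs).get("style") or "")
        color = style.get("color")
        # Fall back to the assumed page background if the tag sets none.
        background = style.get("background-color", self.page_background)
        if color is not None and color == background:
            self.flagged.append(tag)


def find_hidden_text_tags(html: str, page_background: str = "#ffffff") -> list[str]:
    """Return the names of tags that look like same-color hidden text."""
    finder = HiddenTextFinder(page_background)
    finder.feed(html)
    return finder.flagged
```

This only does a plain string comparison, so it would miss `white` versus `#ffffff`, as well as colors set in external stylesheets. It is enough to make the point, though: the hidden keywords are sitting right there in the markup.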

9. Broken Internal Links

Broken links that lead to internal pages also carry the risk of getting the site devalued, because malfunctioning links lead to a poor user experience, and Google always has the users’ best interest at heart. Also, pages that can only be reached through broken links will not be crawled.

10. Hiding Text and Links with CSS, Javascript

Search engine spiders are smarter now: they can understand the files that they crawl. Some SEO wrongdoers hide text and links with CSS, Javascript and other file types, thinking their devious patterns will remain unseen. This practice for boosting link juice isn’t as effective as it once was. Worse, it could get your site booted out of the most widely used search engine.

11. Thin Content

To represent your site more positively to the search engines, avoid thin content. Thin content happens when you have very minimal content, like when your site is 90% ads and navigation menus and only 10% content. It also happens when your content appears on external sites (external duplication). To fix or prevent this, you should make each page original, keep your content to yourself, build up your content, and make each syndicated article unique. If you have too many ads, you can either cut down the number of ads or increase your content.

12. Malware Infected Site

Websites could get delisted if their HTML source code contains malicious code. This may happen if your site gets hacked. One of the worst things that could happen is getting delisted without being aware that your site’s been infected. This is why you should update your web server periodically.